DeepFaceEditing: Deep Face Generation and Editing with Disentangled Geometry and Appearance Control

Shu-Yu Chen1,2 * Feng-Lin Liu1,2 * Yu-Kun Lai3 Paul L. Rosin3 Chunpeng Li1,2 Hongbo Fu4 Lin Gao1,2 †

1 Institute of Computing Technology, Chinese Academy of Sciences

2 University of Chinese Academy of Sciences

3 Cardiff University

4 City University of Hong Kong

* Authors contributed equally

† Corresponding author

Accepted by SIGGRAPH 2021

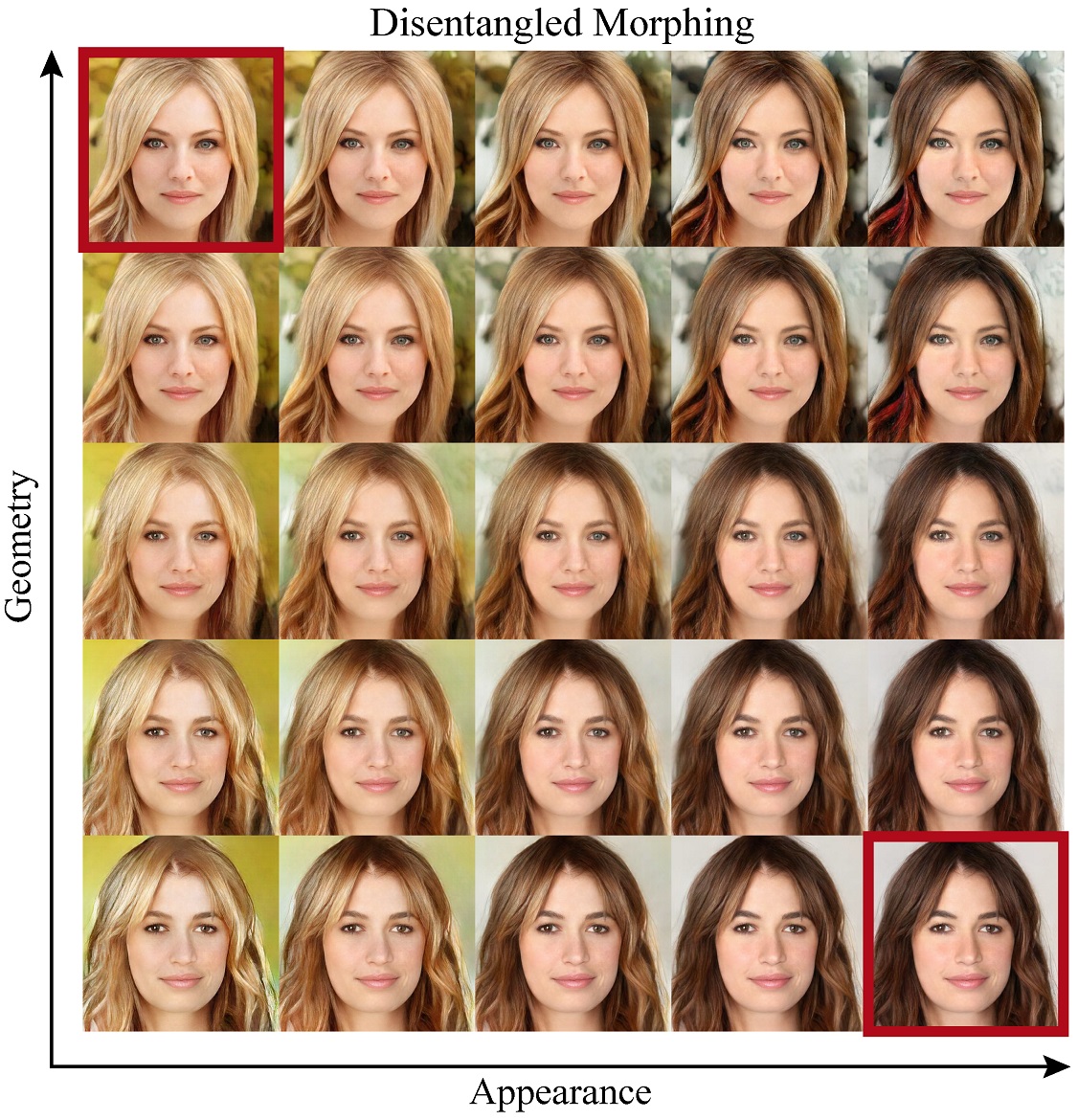

Figure: Our DeepFaceEditing method allows users to intuitively edit a face image to manipulate its geometry and appearance with detailed control. Given a portrait image (a), our method disentangles its geometry and appearance, and the resulting representations can faithfully reconstruct the input image (d). We show a range of flexible face editing tasks that can be achieved with our unified framework: (b) changing the appearance according to the given reference images while retaining the geometry, (c) replacing the geometry of the face with a sketch while keeping the appearance, (e) editing the geometry using sketches, and (f) editing both the geometry and appearance. The inputs used to control the appearance and geometry are shown as small images with green and orange borders, respectively.

Abstract

Recent facial image synthesis methods have been mainly based on conditional generative models. Sketch-based conditions can effectively describe the geometry of faces, including the contours of facial components, hair structures, and salient edges (e.g., wrinkles) on face surfaces, but they lack effective control of appearance, which is influenced by color, material, lighting conditions, etc. To gain more control over the generated results, one possible approach is to apply existing disentanglement methods to decompose face images into geometry and appearance representations. However, existing disentanglement methods are not optimized for human face editing and cannot achieve fine control of facial details such as wrinkles. To address this issue, we propose DeepFaceEditing, a structured disentanglement framework specifically designed for face images to support face generation and editing with disentangled control of geometry and appearance. We adopt a local-to-global approach to incorporate face domain knowledge: local component images are decomposed into geometry and appearance representations, which are then fused consistently by a global fusion module to improve generation quality. We exploit sketches to assist in extracting a better geometry representation, which also supports intuitive geometry editing via sketching. The resulting method can either extract the geometry and appearance representations from face images, or directly extract the geometry representation from face sketches. Such representations allow users to easily edit and synthesize face images with decoupled control of their geometry and appearance. Both qualitative and quantitative evaluations show that our method provides superior detail and appearance control compared to state-of-the-art methods.
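The local-to-global decomposition can be illustrated with a minimal Python/NumPy sketch that cuts a face into the component regions named in the pipeline (left eye, right eye, nose, mouth, background) and then puts them back together. The box coordinates, the 512×512 resolution and the names `COMPONENT_BOXES`, `split_components` and `naive_merge` are hypothetical and chosen only for illustration. DeepFaceEditing fuses the components with a learned global fusion module; this sketch replaces that module with plain pasting so that only the data flow is shown.

```python
import numpy as np

# Hypothetical component boxes as (top, left, height, width) on a 512x512 face.
# Illustrative values only; they are NOT the crops used by DeepFaceEditing.
COMPONENT_BOXES = {
    "left_eye": (182, 108, 128, 128),
    "right_eye": (182, 276, 128, 128),
    "nose": (232, 176, 160, 160),
    "mouth": (301, 160, 192, 192),
}


def split_components(face, boxes=COMPONENT_BOXES):
    """Crop each component patch; what remains outside the boxes is the background."""
    patches = {}
    background = face.copy()
    for name, (top, left, h, w) in boxes.items():
        patches[name] = face[top:top + h, left:left + w].copy()
        background[top:top + h, left:left + w] = 0.0  # blank out the component area
    patches["background"] = background
    return patches


def naive_merge(patches, boxes=COMPONENT_BOXES):
    """Paste patches back onto the background.

    A naive stand-in for the learned global fusion module: later boxes simply
    overwrite earlier ones where they overlap.
    """
    canvas = patches["background"].copy()
    for name, (top, left, h, w) in boxes.items():
        canvas[top:top + h, left:left + w] = patches[name]
    return canvas


face = np.random.default_rng(0).random((512, 512, 3))
parts = split_components(face)
print(sorted(parts))  # ['background', 'left_eye', 'mouth', 'nose', 'right_eye']
print(np.array_equal(naive_merge(parts), face))  # True
```

Untouched patches round-trip exactly. Because the boxes overlap, however, patches edited independently would disagree in the shared pixels and naive pasting would leave visible seams, which illustrates why the components need to be fused consistently rather than simply pasted.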

Pipeline

An overview of our framework. Our pipeline follows a local-to-global strategy. The upper right shows a Local Disentanglement (LD) module for the "right eye", which uses $\mathcal{E}_{image}$ (when a geometry reference image is given) or $\mathcal{E}_{sketch}$ (when a sketch controls the geometry) to extract the geometry features, and $\mathcal{E}_{appearance}$ to extract the appearance features. We use a swapping strategy to generate a new image $I^\prime$ with the geometry of $I_1$ and the appearance of $I_2$. Using $I^\prime$ as the appearance reference together with the geometry of $I_2$ should yield a reconstructed image $I_2^\prime$ that ideally matches $I_2$, which allows us to regularize training with a cycle loss. Note that in our LD modules, these swapping and cycle constraints are applied to the local regions corresponding to the "left eye", "right eye", "nose", "mouth", and "background". The required geometry information can be given explicitly by a sketch or implicitly by a reference image.
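The swap-and-cycle constraint can be traced with a toy Python/NumPy sketch. It assumes, purely for illustration, that an image factors exactly into a geometry map (a per-pixel intensity pattern with mean 1) times an appearance (a single RGB color), so that hand-written functions can stand in for the learned encoders and generator. The names `encode_geometry`, `encode_appearance`, `generate`, `make_toy_image` and `swap_and_cycle` are hypothetical, and the sketch operates on whole images rather than on the per-region LD modules.

```python
import numpy as np

# Toy stand-ins for the learned networks. ASSUMPTION (illustration only):
# an image is a geometry map (H, W) with mean 1 times one RGB appearance color.
# The real encoders (E_image / E_sketch / E_appearance) are neural networks.


def encode_geometry(img):
    """Hypothetical geometry encoder: luminance pattern normalized to mean 1."""
    lum = img.sum(axis=-1)
    return lum / lum.mean()


def encode_appearance(img):
    """Hypothetical appearance encoder: per-channel mean color, shape (3,)."""
    return img.mean(axis=(0, 1))


def generate(geometry, appearance):
    """Hypothetical generator: recombine a geometry map with a color."""
    return geometry[..., None] * appearance[None, None, :]


def make_toy_image(rng, size=64):
    """Build an image that follows the toy geometry-times-color model exactly."""
    geometry = rng.random((size, size)) + 0.5
    geometry /= geometry.mean()
    appearance = rng.random(3) + 0.1
    return generate(geometry, appearance)


def swap_and_cycle(i1, i2):
    """One swap + cycle pass on whole images (the paper applies it per region)."""
    # Swap: I' takes the geometry of I1 and the appearance of I2.
    i_prime = generate(encode_geometry(i1), encode_appearance(i2))
    # Cycle: appearance from I' plus geometry of I2 should reconstruct I2.
    i2_rec = generate(encode_geometry(i2), encode_appearance(i_prime))
    cycle_loss = np.abs(i2_rec - i2).mean()  # L1 cycle loss
    return i_prime, i2_rec, cycle_loss


rng = np.random.default_rng(0)
i1, i2 = make_toy_image(rng), make_toy_image(rng)
_, _, loss = swap_and_cycle(i1, i2)
print(loss < 1e-9)  # True: the toy encoders disentangle perfectly

noise = rng.random((64, 64, 3))  # does not fit the toy model
_, _, noisy_loss = swap_and_cycle(i1, noise)
print(noisy_loss > 0)  # True
```

With perfectly disentangled toy encoders the cycle loss is numerically zero. For an image that the representation cannot capture (here, random per-channel noise) the reconstruction differs from the input and the loss is positive; in training, this is the kind of error a cycle loss penalizes.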

Application

A screenshot of our sketch-based interface for face editing. (a) and (b) are an input sketch overlaid on top of a geometry image, and the corresponding generated face image, respectively. (c) and (d) are the control function panel and the appearance image list, respectively.

Paper

[Coming soon]

Code


BibTeX

author = {Chen, Shu-Yu and Liu, Feng-Lin and Lai, Yu-Kun and Rosin, Paul L. and Li, Chunpeng and Fu, Hongbo and Gao, Lin},

title = {{DeepFaceEditing}: Deep Face Generation and Editing with Disentangled Geometry and Appearance Control},

journal = {ACM Transactions on Graphics (Proceedings of ACM SIGGRAPH 2021)},

year = {2021},

volume = 40,

pages = {90:1--90:15},

number = 4

}


Last updated in May 2021.